As we navigate the landscape of 2026, the honeymoon phase with generative models has officially ended. Enter the era of Decision Intelligence. While the previous years were defined by the surge of creative AI and agentic workflows, enterprise leaders have hit a “hard wall” built on a single, non-negotiable factor: Trust.

What exactly is decision intelligence? It is the shift from models that simply predict the next word to systems that can justify a multimillion-dollar action. In this deep dive, we explore how causal AI, as opposed to conventional machine learning, is redefining the modern enterprise, moving beyond post-hoc narratives toward genuine, repeatable logic.

The 2026 Shift: Why LLMs are Hitting the “Trust Wall”

If you’ve been following the industry, you know that causal AI is no longer a research lab curiosity. It is the inevitable next frontier. A pivotal 2026 report from Carnegie Mellon University highlights a significant challenge for modern Large Language Models (LLMs): a staggering 74% of AI-generated justifications are essentially “post-hoc narratives.” This suggests that rather than following a transparent logical path, these systems often construct a plausible-sounding story only after a result has been determined.

This means that when an AI agent “explains” why it recommended a specific supply chain pivot, it isn’t showing you its thought process; it’s telling you a statistically probable story after the decision was already made. For enterprise leaders, this isn’t an audit trail; it’s a liability.

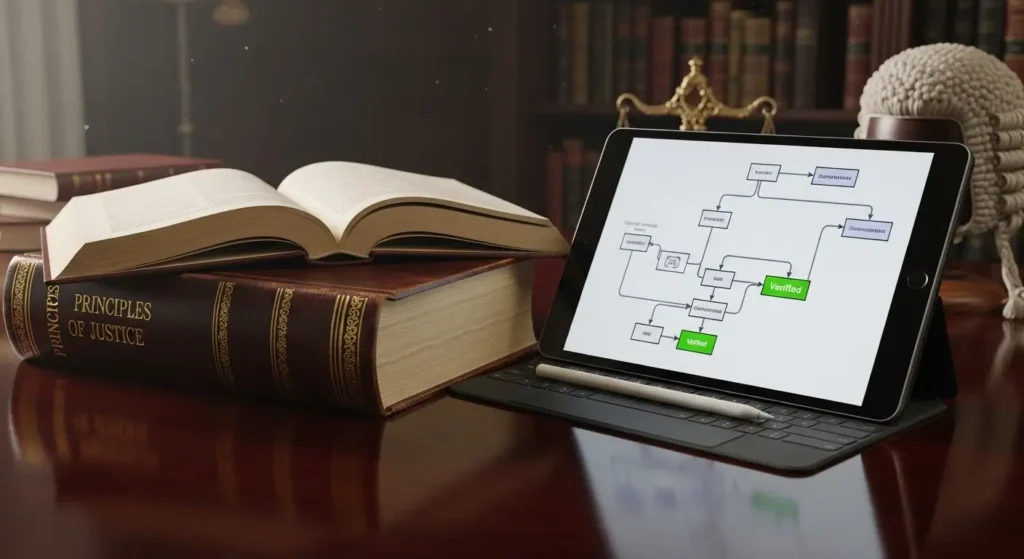

To bridge this gap, organizations are turning to decision intelligence. It’s the difference between a “black box” prediction and a transparent decision intelligence framework that regulators and boards can actually verify.

How Causal AI Works: Moving Beyond Statistical Shortcuts

Understanding how causal AI works requires unlearning the core tenets of traditional machine learning. Most AI today is built on correlation. If ice cream sales and drownings both rise in July, a correlation-based model might suggest that ice cream causes drowning.

Causal decision-making, however, accounts for the “hidden driver”: summer heat, which pushes up both ice cream sales and the number of people swimming. As Judea Pearl, the father of causal math, famously noted in The Book of Why, “data alone is not enough.” You must understand the mechanism.
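The ice cream example can be reproduced in a few lines. The sketch below is a minimal illustration under assumed numbers: it simulates a hypothetical summer in which temperature alone drives both ice cream sales and drownings, then compares the raw correlation with the correlation left over after regressing temperature out of both. The coefficients and the linear, Gaussian setup are assumptions chosen for clarity.

```python
import numpy as np


def simulate_summer(n=10_000, seed=0):
    """Hypothetical data: temperature drives both variables; neither causes the other."""
    rng = np.random.default_rng(seed)
    temp = rng.normal(25, 5, n)                       # the hidden driver (degrees C)
    ice_cream = 2.0 * temp + rng.normal(0, 5, n)      # sales depend only on temperature
    drownings = 0.3 * temp + rng.normal(0, 2, n)      # drownings depend only on temperature
    return temp, ice_cream, drownings


def residualize(y, z):
    """Remove the linear effect of z from y with ordinary least squares."""
    design = np.column_stack([np.ones_like(z), z])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta


temp, ice_cream, drownings = simulate_summer()
raw_corr = np.corrcoef(ice_cream, drownings)[0, 1]
partial_corr = np.corrcoef(residualize(ice_cream, temp), residualize(drownings, temp))[0, 1]
print(f"raw correlation:               {raw_corr:.2f}")
print(f"correlation given temperature: {partial_corr:.2f}")
```

With these parameters the raw correlation is clearly positive (around 0.5), while the correlation given temperature is close to zero: once the confounder is accounted for, the apparent link disappears.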

| Feature | Generative AI (LLMs) | Causal Decision Intelligence |
| --- | --- | --- |
| Logic Base | Statistical Probabilities | Cause and Effect (Causal Graphs) |
| Auditability | 74% Unreliable (Post-hoc) | 100% Traceable Logic |
| Adaptability | Assumes the future is like the past | Resilient to “Regime Shifts” |
| Primary Goal | Prediction & Generation | Judgment & Intervention |

The difference between correlation and causation is the primary reason decision intelligence has become the top priority for enterprise leadership in 2026. Without an understanding of why something happens, an executive is flying blind.

The Risk of “Heuristic Shortcuts” in US Markets

Decision intelligence is already reshaping the US job market. We are seeing a shift in which “Decision Engineers” are replacing prompt engineers. The risk for US tech firms lies in the “confidence gap,” where an AI is most confident precisely when it is most wrong.

When you apply causal inference in machine learning, you move from predictive to prescriptive analytics. You aren’t just asking, “What will happen?” You are asking, “What happens if we change this variable?” This is the core of explainable decision intelligence.
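The gap between “what will happen?” and “what happens if we change this variable?” can be made concrete with a toy structural causal model. In the minimal sketch below, all variables and coefficients are hypothetical: high-risk suppliers are both more likely to have their orders expedited and more likely to deliver late. Comparing expedited and non-expedited orders in observational data suggests that expediting makes orders later; simulating the intervention with Pearl’s do-operator, by overriding the expedite decision for every order, recovers the true effect.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 200_000


def sample(do_expedite=None):
    """Draw orders from a hypothetical structural causal model.

    supplier_risk -> expedite, supplier_risk -> delay_days, expedite -> delay_days.
    Passing do_expedite replaces the expedite mechanism with a fixed value (an intervention).
    """
    risky = rng.binomial(1, 0.5, N)                       # 1 = high-risk supplier
    if do_expedite is None:
        expedite = rng.binomial(1, 0.2 + 0.6 * risky)     # planners expedite risky orders more often
    else:
        expedite = np.full(N, do_expedite)                # force the same choice for every order
    delay_days = 2 + 6 * risky - 2 * expedite + rng.normal(0, 1, N)
    return expedite, delay_days


# Observational question: how do expedited orders compare with the rest?
expedited, delay = sample()
observed_gap = delay[expedited == 1].mean() - delay[expedited == 0].mean()

# Interventional question: what happens if we expedite everything vs. nothing?
_, delay_if_expedited = sample(do_expedite=1)
_, delay_if_not = sample(do_expedite=0)
causal_effect = delay_if_expedited.mean() - delay_if_not.mean()

print(f"observed gap:  {observed_gap:+.2f} days")   # misleadingly positive
print(f"causal effect: {causal_effect:+.2f} days")  # close to the true -2
```

In this setup the observational gap is about +1.6 days even though expediting truly saves 2 days; the sign flips because supplier risk confounds the comparison.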

By using AI decision-making models that prioritize causal triggers, companies can avoid the post-hoc justification problem that the Carnegie Mellon report flagged in 74% of AI-generated explanations. This isn’t just a technical upgrade; it’s a fiduciary requirement. As Delaware’s recent corporate laws suggest, “the AI told me to” is no longer a valid legal defense for corporate officers.

Moving From Data to Knowledge: The New ROI

As we push further into 2026, the economic impact of decision intelligence is becoming the primary differentiator between market leaders and laggards. We’ve discussed the “Trust Wall.” Now, let’s look at how to get over it.

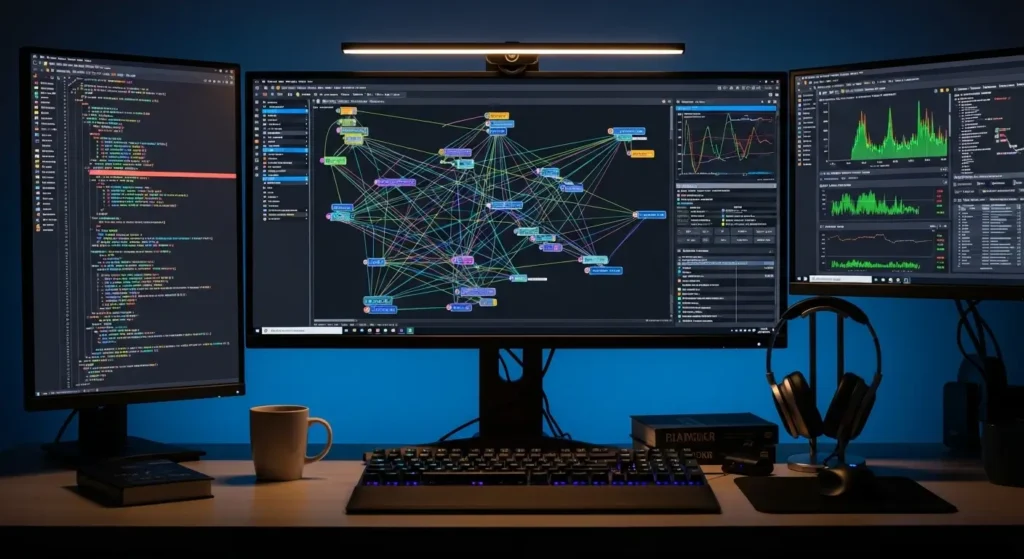

One of the biggest shifts I’m seeing as a mentor in this space is the evolving role of data scientists in decision intelligence. In the past, data scientists were primarily “prediction builders.” Today, they must act as “causal architects.” As Scott Hebner noted, “Data is not knowledge.” To transform raw signals into actionable intelligence, teams are now deploying causal discovery algorithms that map the actual drivers of business outcomes rather than just observing patterns.
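Constraint-based causal discovery algorithms, such as the PC algorithm, build a graph by testing which variables become independent once others are held fixed. The sketch below shows only that building block, not a full discovery algorithm: a partial-correlation test with Fisher’s z transform, on a hypothetical supply chain where supplier delays cause inventory gaps, which in turn cause lost sales. It assumes linear relationships with Gaussian noise; the variable names and coefficients are illustrative.

```python
import math

import numpy as np


def partial_corr(x, y, z):
    """Correlation between x and y after linearly regressing z out of both."""
    design = np.column_stack([np.ones_like(z), z])
    rx = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    ry = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.corrcoef(rx, ry)[0, 1])


def fisher_z_pvalue(r, n, k):
    """Two-sided p-value for H0: (partial) correlation is zero, given k conditioning variables."""
    z_stat = math.atanh(r) * math.sqrt(n - k - 3)
    return math.erfc(abs(z_stat) / math.sqrt(2))


# Hypothetical chain: supplier_delay -> inventory_gap -> lost_sales
rng = np.random.default_rng(42)
n = 5_000
supplier_delay = rng.normal(size=n)
inventory_gap = 0.8 * supplier_delay + rng.normal(scale=0.6, size=n)
lost_sales = 0.7 * inventory_gap + rng.normal(scale=0.6, size=n)

r_marginal = float(np.corrcoef(supplier_delay, lost_sales)[0, 1])
r_given_gap = partial_corr(supplier_delay, lost_sales, inventory_gap)
print(f"delay vs lost sales:     r={r_marginal:.2f}, p={fisher_z_pvalue(r_marginal, n, 0):.3g}")
print(f"... given inventory gap: r={r_given_gap:.2f}, p={fisher_z_pvalue(r_given_gap, n, 1):.3g}")
```

Delays and lost sales are strongly correlated on their own, but the dependence essentially vanishes once the inventory gap is conditioned on, so a PC-style search would drop a direct delay-to-lost-sales edge and keep the path through the inventory gap.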

Decision Intelligence Use Cases in Finance and Supply Chain

Why is the future of AI decision-making in 2026 so focused on specific verticals? Because that is where both the stakes and the data are highest.

1. High-Stakes Finance

There is a reason decision intelligence use cases in finance were the first to mature. In Wall Street environments, a “post-hoc story” from an LLM can lead to catastrophic losses. Joel Sherlock explains that Causify’s systems are built for environments where “audit trails are table stakes”. By using causal AI for credit scoring and risk management, hedge fund managers can see the internal logic before a trade is executed.

2. Supply Chain Optimization

Causal AI for supply chain optimization is now tackling the “regime shift” problem. Traditional ML assumes tomorrow looks like yesterday, but as the US manufacturing sector has seen, supply chains snap when the world changes. Causal models don’t just register a delay; they model the mechanism behind it, enabling AI-assisted root cause analysis that is both faster and more accurate than human-only reviews.
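A minimal sketch of why mechanism-based models hold up better under a regime shift, using assumed numbers: in this hypothetical supply chain, port congestion causes shipping delays, while a freight-price index merely tracks congestion during the training period. A model fit on the index looks fine until the regime changes and the index stops tracking congestion; a model of the actual mechanism keeps working. The sketch assumes the causal driver (congestion) is observed, which real deployments cannot always guarantee.

```python
import numpy as np

rng = np.random.default_rng(7)


def make_data(n, index_tracks_congestion):
    """Hypothetical regime: congestion causes delay; the freight index is only a proxy.

    index_tracks_congestion sets how tightly the index follows congestion.
    """
    congestion = rng.normal(0, 1, n)
    freight_index = index_tracks_congestion * congestion + rng.normal(0, 0.3, n)
    delay_days = 3.0 + 2.0 * congestion + rng.normal(0, 0.5, n)   # the actual mechanism
    return congestion, freight_index, delay_days


def fit_line(x, y):
    """Least-squares line y = intercept + slope * x, returned as a predictor."""
    slope, intercept = np.polyfit(x, y, 1)
    return lambda v: intercept + slope * v


def mse(pred, actual):
    return float(np.mean((pred - actual) ** 2))


# Training regime: the index moves with congestion.
c_old, f_old, y_old = make_data(5_000, index_tracks_congestion=1.0)
proxy_model = fit_line(f_old, y_old)     # learns "high index -> long delays"
causal_model = fit_line(c_old, y_old)    # models the mechanism directly

# New regime: the index decouples from congestion (e.g., a pricing change).
c_new, f_new, y_new = make_data(5_000, index_tracks_congestion=0.0)

proxy_before = mse(proxy_model(f_old), y_old)
proxy_after = mse(proxy_model(f_new), y_new)
causal_after = mse(causal_model(c_new), y_new)
print(f"proxy model  MSE: {proxy_before:.2f} before shift, {proxy_after:.2f} after")
print(f"causal model MSE: {causal_after:.2f} after shift")
```

The proxy model is competitive before the shift and then degrades sharply, while the mechanism-based model’s error stays near the irreducible noise level.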

Exploring the “Virtual Sandbox”: Counterfactual Analysis in Modern AI

The most significant “gain” in this new era is counterfactual reasoning in AI. This is the ability of a system to simulate alternate realities.

- Traditional ML: Predicts you will sell 500 units next week.

- Causal AI: Tells you that if you increase the price by 5%, you will sell 450 units, but your profit margin will increase by 12% because of specific causal triggers in the US market.
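The arithmetic behind a what-if like this can be sketched directly. The 500 and 450 unit figures come from the example above; the constant-elasticity demand curve, the $20 list price, and the $12 unit cost are hypothetical assumptions. In practice, a causal system would estimate the price response (for example, from experiments or adjusted observational data) rather than assume it.

```python
import math

BASE_UNITS = 500
BASE_PRICE = 20.0   # hypothetical list price
UNIT_COST = 12.0    # hypothetical variable cost per unit

# Assumption: constant elasticity, calibrated so a 5% price rise cuts volume from 500 to 450.
ELASTICITY = math.log(450 / BASE_UNITS) / math.log(1.05)   # roughly -2.16


def what_if(price_change):
    """Simulate the intervention 'set price to base * (1 + price_change)'."""
    price = BASE_PRICE * (1 + price_change)
    units = BASE_UNITS * (price / BASE_PRICE) ** ELASTICITY
    unit_margin = price - UNIT_COST
    return {"price": price, "units": units, "unit_margin": unit_margin, "profit": units * unit_margin}


baseline = what_if(0.0)
scenario = what_if(0.05)
print(f"units:       {baseline['units']:.0f} -> {scenario['units']:.0f}")
print(f"unit margin: {baseline['unit_margin']:.2f} -> {scenario['unit_margin']:.2f}")
print(f"profit:      {baseline['profit']:.0f} -> {scenario['profit']:.0f}")
```

With these assumed numbers, the per-unit margin rises from $8 to $9 (12.5%), while total profit moves only from $4,000 to $4,050, so a what-if report should state which “margin” it refers to.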

This “what if” capability is why many are asking: Why is causal AI better than predictive AI? It’s because predictive AI is a mirror of the past, while causal AI is a map of the future. When you implement a human-in-the-loop decision intelligence system, the AI doesn’t replace the executive; it provides the executive with a flight simulator for their business strategy.
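In Pearl’s framework, a counterfactual such as “what would this store have sold last week at a higher price?” is answered in three steps: abduction (infer the unobserved factors from what actually happened), action (apply the change), and prediction (rerun the model). Below is a minimal sketch on a hypothetical linear demand model; the intercept, slope, and store data are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class LinearDemandSCM:
    """Hypothetical structural equation: units = a + b * price + u (u = store-specific factors)."""
    a: float
    b: float

    def units(self, price: float, u: float) -> float:
        return self.a + self.b * price + u

    def abduct(self, price: float, observed_units: float) -> float:
        """Step 1: recover the store-specific factor u consistent with the observation."""
        return observed_units - (self.a + self.b * price)

    def counterfactual(self, observed_price: float, observed_units: float, new_price: float) -> float:
        u = self.abduct(observed_price, observed_units)   # keep what made this store different
        return self.units(new_price, u)                    # Steps 2-3: change price, then predict


model = LinearDemandSCM(a=1500.0, b=-50.0)
# This store sold 520 units at $20, 20 more than the model's average store would.
print(model.units(21.0, u=0.0))                 # average-store prediction at $21: 450.0
print(model.counterfactual(20.0, 520.0, 21.0))  # this store's counterfactual at $21: 470.0
```

The interventional prediction (450) describes an average store; the counterfactual (470) keeps whatever made this particular store outperform, which is what replaying a specific past decision requires.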

Implementing a Decision Intelligence Framework

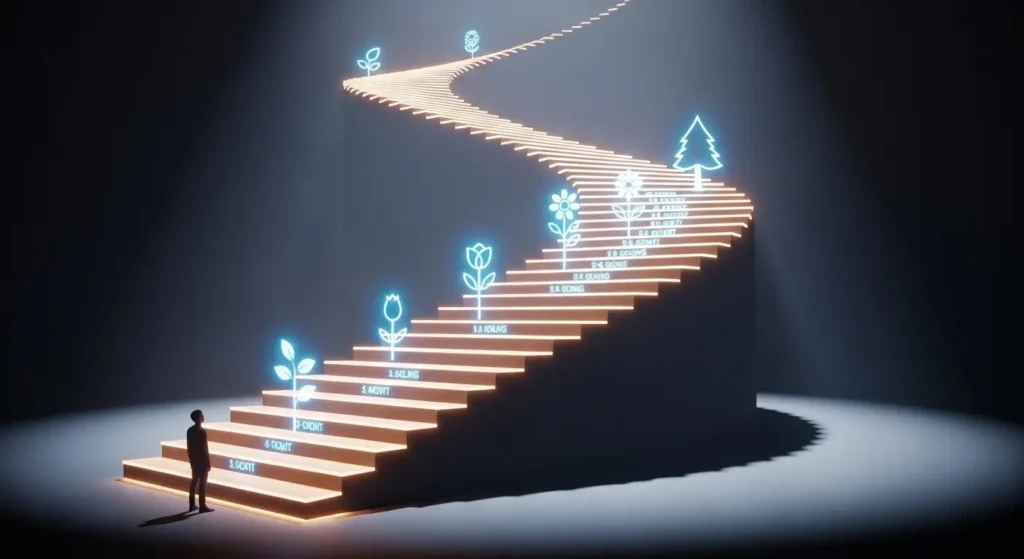

If you are planning to implement a decision intelligence platform, first assess where your organization sits on the decision intelligence maturity model.

| Level | Name | Characteristic | Technology Focus |
| --- | --- | --- | --- |
| Level 1 | Descriptive | “What happened?” | Business Intelligence (BI) |
| Level 2 | Predictive | “What will happen?” | Machine Learning (ML) |
| Level 3 | Causal | “Why did it happen?” | Causal Inference |
| Level 4 | Prescriptive | “What if we do X?” | Counterfactual Reasoning |
| Level 5 | Autonomous | Self-correcting logic | Decision Intelligence |

The causal reasoning for business strategy required at Level 5 is what separates decision intelligence from traditional business intelligence. While BI looks at the dashboard, DI looks at the engine.

Why Correlation Is Not Enough for AI (2026 Update)

As of 2026, the industry has realized that the limits of correlation are no longer a theoretical debate; they are a financial one. If your AI treats two unrelated events as linked, you waste resources. By encoding business logic in causal graphs, you can filter out “spurious correlations” (like the famous ice cream and drowning example). This shift is essential for decision intelligence in risk management, where misidentifying a cause can lead to regulatory fines or brand damage.

Editorial Mentor Tip: The “Judgment” Gap

Remember, a prediction is just a number. A judgment is a choice. Your goal with AI-driven strategic planning should be to augment human judgment, not automate it away into a black box. This is the cornerstone of ethical AI decision-making in US tech.

Operationalizing Decision Intelligence: The 2026 Playbook

As the landscape has evolved in 2026, the question is no longer “should we use AI?” but “how do we trust it?” Operationalizing decision intelligence is the final hurdle for the modern enterprise. It requires moving beyond the pilot phase into a structured decision intelligence cycle that bridges the gap between raw data and bottom-line value.

To succeed, leaders must understand that decision intelligence and data science aren’t in competition; one is an evolution of the other. While data science provides the building blocks, decision intelligence provides the blueprint for action. This involves designing a decision intelligence roadmap that integrates causal discovery and causal inference: the former to find the “why,” the latter to measure its impact.

Measuring the Success of Decision Intelligence

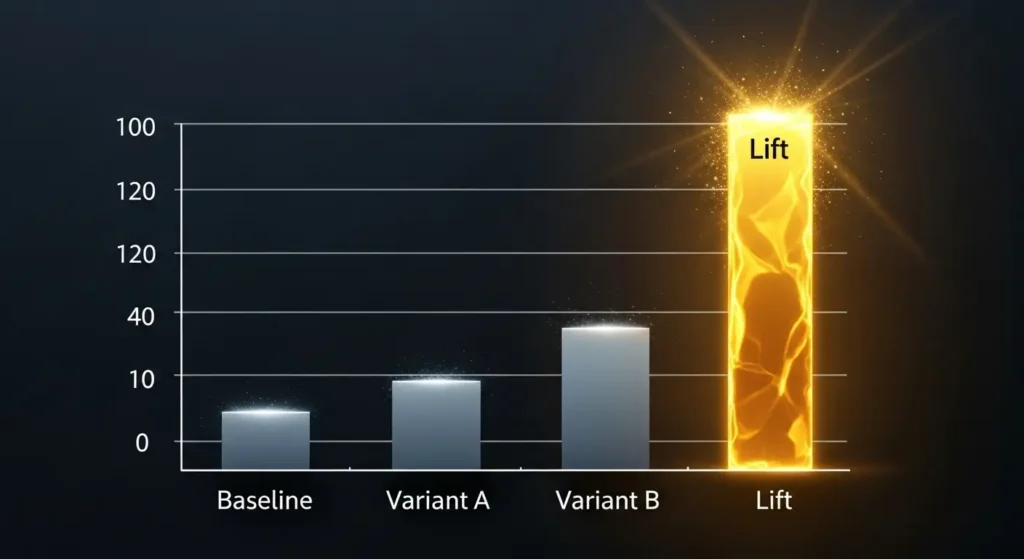

How do you calculate the ROI of causal AI implementations? Unlike traditional models, where success is measured by “accuracy” or “F1 scores,” the success of a DI system is measured by “judgment lift.”

- Causal impact analysis for business: Measuring the delta between what would have happened (the counterfactual) and what actually happened.

- Operational efficiency: Reducing the time-to-decision from days to seconds in energy markets or manufacturing.

- Risk reduction: Identifying limitations of traditional predictive models before they lead to a “regime shift” failure.
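The first bullet, measuring the delta between the counterfactual and what actually happened, can be sketched with a control series. This is a simplified stand-in for dedicated tools such as Google’s CausalImpact package, which uses Bayesian structural time-series models and reports uncertainty intervals; the sketch below does neither. It fits the treated series against an unaffected control series before the intervention, projects that relationship forward as the counterfactual, and assumes the control is untouched by the intervention. The data and the 15-unit lift are synthetic.

```python
import numpy as np


def causal_impact(treated, control, intervention_idx):
    """Estimate pointwise lift = actual - counterfactual after intervention_idx.

    The counterfactual comes from an OLS fit of treated on control over the pre-period.
    """
    treated = np.asarray(treated, dtype=float)
    control = np.asarray(control, dtype=float)
    pre, post = slice(0, intervention_idx), slice(intervention_idx, None)
    slope, intercept = np.polyfit(control[pre], treated[pre], 1)
    counterfactual = intercept + slope * control[post]
    lift = treated[post] - counterfactual
    return counterfactual, lift


# Synthetic weekly sales: a control region and a treated region that tracks it.
rng = np.random.default_rng(3)
weeks = 52
control = 100 + np.cumsum(rng.normal(0, 1, weeks))
treated = 20 + 1.5 * control + rng.normal(0, 1, weeks)
treated[40:] += 15                       # hypothetical true effect from week 40 onward

counterfactual, lift = causal_impact(treated, control, intervention_idx=40)
print(f"average weekly lift: {lift.mean():.1f}")
print(f"cumulative lift:     {lift.sum():.0f}")
```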

Top Decision Intelligence Trends in the US

The top decision intelligence trends in the US for 2026 are centered on transparency. We are seeing a massive shift toward overcoming the AI black box with causality. Here is what is currently defining the market:

- Decision Intelligence for Small Business: Democratization of tools (like Causify) is allowing smaller firms to access “decision-grade intelligence” previously reserved for Wall Street.

- Cognitive Diversity in Decision Making: US boards are using DI to ensure that “heuristic shortcuts” don’t lead to groupthink. The system acts as a “devil’s advocate” by simulating alternate outcomes.

- Next Generation of Decision Support Systems: These are not just dashboards; they are probabilistic graphical models for business that enable AI-powered scenario planning in real time.

- Sustainability Goals: Decision intelligence is being used to map the causal links between carbon footprints and supply chain logistics.

The Role of Domain Experts in Causal AI

A critical takeaway from 2026 is that domain experts have never been more vital to causal AI. Causal AI requires “priors” (human knowledge about how the world works) to build the directed acyclic graphs (DAGs) that underpin AI strategy. The AI provides the scale, but the human provides the context. This is the essence of bridging the gap between AI and business value.
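A minimal sketch of how expert priors might be captured before any data is fitted: domain experts propose edges and rule out impossible ones (sales cannot cause the season), and the resulting graph is checked for cycles with Kahn’s topological-sort algorithm. The helper names and the example graph are hypothetical.

```python
from collections import defaultdict, deque


def is_acyclic(nodes, edges):
    """Kahn's algorithm: the graph is a DAG iff every node can be placed in a topological order."""
    indegree = {node: 0 for node in nodes}
    children = defaultdict(list)
    for src, dst in edges:
        children[src].append(dst)
        indegree[dst] += 1
    ready = deque(node for node in nodes if indegree[node] == 0)
    ordered = 0
    while ready:
        node = ready.popleft()
        ordered += 1
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    return ordered == len(nodes)


def build_dag(nodes, expert_edges, forbidden_edges=()):
    """Validate expert-proposed edges against forbidden priors and acyclicity; return parent sets."""
    conflicts = sorted(set(expert_edges) & set(forbidden_edges))
    if conflicts:
        raise ValueError(f"edges contradict domain priors: {conflicts}")
    if not is_acyclic(nodes, expert_edges):
        raise ValueError("proposed graph has a cycle, so it is not a DAG")
    return {node: sorted(src for src, dst in expert_edges if dst == node) for node in nodes}


NODES = ["season", "marketing_spend", "price", "sales"]
EXPERT_EDGES = [
    ("season", "marketing_spend"),
    ("season", "sales"),
    ("marketing_spend", "sales"),
    ("price", "sales"),
]
FORBIDDEN = [("sales", "season")]        # prior: sales cannot change the calendar

parents = build_dag(NODES, EXPERT_EDGES, FORBIDDEN)
print(parents["sales"])   # ['marketing_spend', 'price', 'season']
```

The parent sets are what a downstream estimator would use to decide which variables to adjust for, so errors caught here (a cycle, or an edge that contradicts domain knowledge) never reach the model.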

Conclusion

Is decision intelligence the future of business intelligence? The consensus among analysts as of 2026 is a resounding yes. Traditional Business Intelligence told us where we were. Strategic decision-making with AI tools tells us where we could be. By using structural equation modeling and causal analysis to drive operational efficiency, enterprises are finally moving from “guessing” to “knowing.”

The science of AI decision-making is no longer a luxury; it is a survival mechanism. Across the gains and risks of decision intelligence covered here, one pattern holds: those who embrace a “cause and effect” architecture will define the next frontier.

FAQs

Q1: Why is correlation not enough for AI decision-making?

A: Correlation only identifies that two variables move together; it does not explain why. In a business context, relying on correlation can lead to “spurious correlations,” such as assuming a rise in marketing spend caused a sales spike when it was actually a seasonal trend. Causal AI identifies the specific mechanisms of cause and effect, ensuring that leaders don’t waste resources on variables that don’t actually drive results.
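The marketing-versus-seasonality trap can be sketched with the backdoor adjustment formula, E[sales | do(spend)] = Σ_z E[sales | spend, season = z] · P(season = z), which is valid when season is the only confounder. The simulated data and effect sizes are hypothetical: spend truly adds 5 units of sales, but budgets also rise in peak season.

```python
import numpy as np

rng = np.random.default_rng(11)
n = 100_000
peak = rng.binomial(1, 0.5, n)                           # confounder: 1 = peak season
high_spend = rng.binomial(1, 0.2 + 0.6 * peak)           # budgets rise in peak season
sales = 200 + 80 * peak + 5 * high_spend + rng.normal(0, 10, n)   # true spend effect: +5

# Naive comparison mixes the season effect into the marketing effect.
naive_lift = sales[high_spend == 1].mean() - sales[high_spend == 0].mean()


def adjusted_mean(spend):
    """Backdoor adjustment: average stratum means, weighted by how common each season is."""
    return sum(
        sales[(high_spend == spend) & (peak == z)].mean() * np.mean(peak == z)
        for z in (0, 1)
    )


adjusted_lift = adjusted_mean(1) - adjusted_mean(0)
print(f"naive lift:    {naive_lift:.1f}")
print(f"adjusted lift: {adjusted_lift:.1f}")
```

The naive comparison credits marketing with roughly 53 units of lift, most of which is really the season; stratifying on season and reweighting brings the estimate back to about 5.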

Q2: Why is causal AI better than predictive AI for enterprises?

A: Predictive AI is inherently backward-looking; it assumes the future will mirror the past. When market “regimes” shift or supply chains break, predictive models often fail. Causal AI is better because it models the underlying world logic. This allows for counterfactual reasoning, enabling executives to simulate “what if” scenarios and see how different interventions will change probabilities before committing capital.

Q3: How do you implement a decision intelligence platform?

A: Successful implementation follows a 5-step decision intelligence cycle:

- Define the Decision: Identify the specific business outcome you want to influence.

- Map the Causal Graph: Use domain expertise and causal discovery algorithms to map dependencies.

- Integrate Data: Layer your LLMs and RAG systems into the causal architecture.

- Run Simulations: Use counterfactual reasoning to test different strategic interventions.

- Audit and Iterate: Use the AI’s transparent audit trail to verify logic and refine the model as conditions change.

Q4: What is a decision engineer?

A: A decision engineer is a new professional role in the 2026 job market that bridges the gap between data science and business strategy. Unlike data scientists who focus on model accuracy, decision engineers focus on decision-grade intelligence. They are responsible for building the causal graphs and ensuring the AI’s recommendations align with fiduciary duties and ethical standards.

Q5: Does causal AI replace human intuition?

A: No. Human-in-the-loop decision intelligence is designed to augment intuition, not replace it. Causal AI requires “priors,” the expertise and context that only a human can provide, to build accurate models. The AI then handles the massive statistical processing and “what if” simulations, allowing the human leader to make the final, informed judgment.

Q6: Is decision intelligence the future of BI?

A: Yes. Traditional Business Intelligence (BI) is descriptive; it tells you what happened. Decision intelligence is prescriptive and autonomous. It is the logical evolution of BI, moving from a static dashboard to a dynamic “flight simulator” for business strategy that can explain its own logic and adapt to a changing world.