In the age of generative AI, the battle against AI misinformation is shifting from simple visual checks to digital forensics rooted in physics and code. This comprehensive guide, “Deepfake Detection: 5 Pro Tips & Why Humans Fail,” dives deep into the state-of-the-art techniques used by top researchers and journalists to identify synthetic media. Learn the fundamental flaw of AI, its inability to master 3D physics, and how to exploit it with pro tips like Shadow Analysis (lighting consistency) and Vanishing Point Geometry. Crucially, we reveal the sobering truth: why human intuition and simple checklists are all but guaranteed to fail against high-quality fakes.

Discover the essential role of ‘active’ deepfake detection methods, such as imperceptible watermarking (Google’s SynthID) and Content Provenance standards (C2PA), that verify content origin. Equip yourself to navigate the digital age, understand AI content authenticity standards and see why professional-grade AI detection for journalists is now the only reliable defense against the pervasive threat of fabricated content.

The Fundamental Flaw: Patterns vs Physics

To understand how to detect AI-generated text, images or video, you first have to understand how the adversary operates. Generative AI is fundamentally a pattern-matching machine. It learns how to create content by analyzing billions of images, audio clips and text documents.

However, there is a critical gap in its “knowledge.” While it knows what a shadow looks like in a painting, it doesn’t understand what a lens is. It doesn’t comprehend the laws of physics, geometry or the three-dimensional nature of our world. It isn’t recreating the physical reality you and I inhabit; it is generating the most statistically likely pixels or words based on what it has seen. This lack of physical grounding is exactly where AI misinformation detection tools, deepfake detection systems and human forensic experts find their opening.

The Landscape of Synthetic Deception

Before we dissect the visual anomalies, we must address the sheer breadth of the problem. Identifying AI-generated content is no longer just about spotting a six-fingered hand. The scope includes:

- Deepfake Text: Large Language Models (LLMs) can churn out endless streams of persuasive articles.

- AI-Generated News: Synthetic news anchors reading fabricated scripts.

- Audio and Voice Cloning: Replicating a CEO’s voice to authorize fraudulent transfers.

While this article focuses heavily on visual forensics, where physics gives us the strongest clues, the principles of skepticism apply everywhere. For instance, detecting AI-generated text often involves looking for a lack of semantic depth, much like looking for a lack of physical depth in images. An AI text detection tool might analyze how statistically predictable the wording is, whereas visual detection analyzes light and space.

Why Deepfake Text Detection Matters

Fake news often starts with the written word. Algorithms designed for deepfake text detection look for statistical regularities that humans rarely produce. If you are trying to identify AI-generated content in written form, you are often fighting against a machine that has read more books than any human ever could. Spotting AI-written content has become a primary focus for researchers, and the leading detectors of 2025 combine linguistic analysis with fact-checking cross-references.
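To make “statistical regularities” concrete, here is a minimal Python sketch of one heuristic sometimes discussed for text: burstiness, measured here as how much sentence length varies (standard deviation divided by mean). The function names and the naive sentence splitter are assumptions for illustration. Commercial detectors rely on far richer signals, such as language-model probabilities, and this number alone should never be treated as a verdict.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split on '.', '!' and '?'."""
    pieces = re.split(r"[.!?]+(?:\s+|$)", text)
    return [len(p.split()) for p in pieces if p.strip()]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (stdev / mean).

    Hypothetical heuristic: values near 0 mean every sentence has almost the
    same length. Low burstiness is at most a weak hint, never proof.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.stdev(lengths) / statistics.mean(lengths)


uniform = "One two three. Four five six. Seven eight nine."
varied = "Hi. This sentence is quite a bit longer than the first. Ok."
print(burstiness(uniform))            # 0.0
print(round(burstiness(varied), 2))   # 1.3
```

Human writing tends to mix short and long sentences, but many people write evenly and many models are tuned to vary their rhythm. That is why such features are, at best, one input among many.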

But unlike text, where the “truth” can be subjective or stylistic, images have to obey laws: the laws of physics. And AI is a notorious lawbreaker.

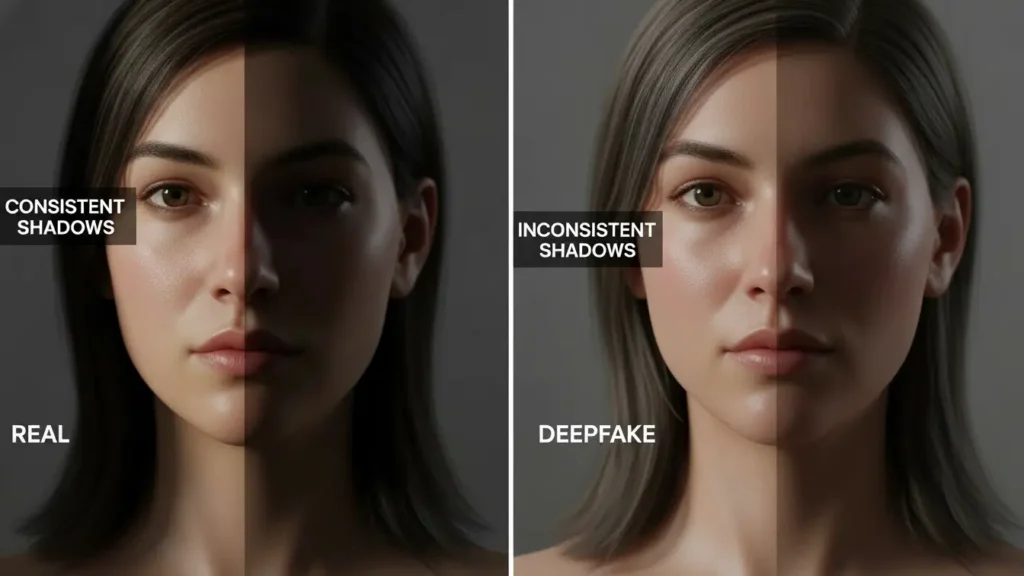

Pro Tip 1: The Shadow Analysis (Lighting Consistency)

One of the most powerful techniques for detecting AI-generated misinformation comes from simple observation of the natural world. If you step outdoors on a sunny day in Virginia, you will see shadows cast by trees, cars and buildings. Because there is a single dominant light source, the Sun, all those shadows must obey a specific geometric law. A line drawn from any point on a shadow through the matching point on the object that casts it must lead back toward that single light source.

Research on AI disinformation detection shows that image generators struggle here. When an AI generates an image, it does so in 2D space. It is painting a picture based on other pictures, not simulating a 3D environment. Consequently, it often places shadows that suggest multiple light sources or impossible angles.

The Geometric Test

Forensic tools used in automated misinformation detection can mathematically trace a line from a point on a shadow through the corresponding point on the object casting it. If you do this for every shadow in the scene and the lines do not converge on a single source (the Sun), the scene is physically implausible.
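The geometric test can be sketched as a small least-squares problem. The Python below assumes an analyst has already marked matching pairs of points in image pixel coordinates: a point on a shadow and the point on the object that casts it. The function names are hypothetical. Each pair defines a line. The code finds the single point closest to all the lines, and the RMS distance of the lines from that point measures how badly the shadows disagree. Published forensic methods treat the uncertainty in choosing matching points much more carefully; this only illustrates the core idea.

```python
import math

Point = tuple[float, float]


def _line_through(shadow: Point, obj: Point) -> tuple[float, float, float]:
    """Return (nx, ny, c) for the line n . p = c through both points, with |n| = 1."""
    dx, dy = obj[0] - shadow[0], obj[1] - shadow[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("shadow point and object point coincide")
    nx, ny = -dy / length, dx / length
    return nx, ny, nx * shadow[0] + ny * shadow[1]


def fit_light_source(pairs: list[tuple[Point, Point]]) -> tuple[Point, float]:
    """Least-squares common point of all shadow-to-object lines.

    Returns the estimated (projected) light position in pixels and the RMS
    perpendicular distance of the lines from it. A large RMS means the
    shadows are not consistent with a single point light.
    """
    if len(pairs) < 2:
        raise ValueError("need at least two shadow/object pairs")
    lines = [_line_through(s, o) for s, o in pairs]
    # Normal equations A [x, y]^T = b for minimizing sum (n . p - c)^2.
    a11 = sum(nx * nx for nx, _, _ in lines)
    a12 = sum(nx * ny for nx, ny, _ in lines)
    a22 = sum(ny * ny for _, ny, _ in lines)
    b1 = sum(nx * c for nx, _, c in lines)
    b2 = sum(ny * c for _, ny, c in lines)
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("lines are (nearly) parallel in the image")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a12 * b1) / det
    rms = math.sqrt(sum((nx * x + ny * y - c) ** 2 for nx, ny, c in lines) / len(lines))
    return (x, y), rms


# Hypothetical hand-marked pairs: (point on shadow, matching point on object).
consistent = [((-100.0, 100.0), (0.0, 50.0)),
              ((0.0, 100.0), (50.0, 50.0)),
              ((300.0, 100.0), (200.0, 50.0))]
skewed = consistent[:2] + [((150.0, 100.0), (200.0, 50.0))]  # one shadow moved
print(fit_light_source(consistent))
print(fit_light_source(skewed))
```

With the consistent pairs, the fitted point lands at about (100, 0) with an RMS residual near zero. Moving one shadow so its line misses that point pushes the residual to tens of pixels. What counts as “too large” depends on image resolution and marking accuracy, so any threshold is a judgment call. If the light is so distant that the lines are parallel in the image, the sketch raises an error rather than returning a point at infinity.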

An AI fake news detector analyzing a photo of a politician might flag it simply because the shadow of the nose doesn’t match the shadow of the collar. To the casual human eye, it looks like a “pretty picture.” Our visual system is forgiving; we are used to paintings where artists cheat physics for dramatic effect. A forensic algorithm is not forgiving. It demands geometric consistency, and when that consistency is missing, it becomes a strong signal of AI-generated image misinformation.

Pro Tip 2: Vanishing Points and Projective Geometry

Another sophisticated method for synthetic media detection involves the “vanishing point,” a rule of perspective that painters have understood since the Renaissance but that modern AI often gets wrong.

Imagine standing on railroad tracks (though, as the experts advise, please don’t actually do this). In the physical world, those tracks are parallel. They never touch. However, if you take a photograph of them, they appear to converge at a single point on the horizon due to projective geometry. This rule applies to any set of parallel lines: the top and bottom of a window, the sides of a tall building or the edges of a sidewalk.

The AI’s 2D Blind Spot

Because AI generators “live” in a 2D world, they struggle to maintain this geometric consistency across a complex image. Text detection relies on syntax, but image detection can rely on these vanishing points.

If you analyze a synthetic image of a dining room, edges that are parallel in the room, such as the sides of the table, the rug and the window frames, should all converge to a common vanishing point. In many examples of AI misinformation, they don’t. The table’s edges might recede toward one point while the window frames recede toward another.

We can measure these deviations. When detecting bot-written articles, we look for logical inconsistencies; when spotting AI-generated images, we look for geometric violations. If edges that are parallel in the scene have scattered vanishing points, the image violates the laws of projective geometry. Once lens distortion is ruled out, that is strong mathematical evidence that the scene never existed in the physical world.
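Measuring vanishing-point deviations is straightforward in homogeneous coordinates, where both the line through two points and the intersection of two lines are cross products. The Python sketch below assumes the analyst has traced segments that should be parallel in the real scene, for example the long edges of a table, and that lens distortion is negligible or already corrected. It intersects every pair of segments and reports how far those intersections scatter. The function names and the idea of thresholding the spread are illustrative assumptions.

```python
import itertools
import math

Point = tuple[float, float]
Segment = tuple[Point, Point]


def _cross(u, v):
    """Cross product of two 3-vectors (homogeneous coordinates)."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _line(seg: Segment):
    """Homogeneous line through the two endpoints of an image segment."""
    (x1, y1), (x2, y2) = seg
    return _cross((x1, y1, 1.0), (x2, y2, 1.0))


def pairwise_intersections(segments: list[Segment]) -> list[Point]:
    """Image-plane intersection of every pair of extended segments."""
    points = []
    for s, t in itertools.combinations(segments, 2):
        x, y, w = _cross(_line(s), _line(t))
        if abs(w) < 1e-9:
            continue  # parallel in the image (point at infinity); skipped in this sketch
        points.append((x / w, y / w))
    return points


def vanishing_point_spread(segments: list[Segment]) -> float:
    """Largest distance (pixels) of any pairwise intersection from their centroid."""
    pts = pairwise_intersections(segments)
    if len(pts) < 2:
        return 0.0
    cx = sum(p[0] for p in pts) / len(pts)
    cy = sum(p[1] for p in pts) / len(pts)
    return max(math.hypot(x - cx, y - cy) for x, y in pts)


# Hypothetical traced table edges (pixels) that should be parallel in the room.
table_edges = [((0.0, 400.0), (250.0, 300.0)),
               ((0.0, 300.0), (250.0, 250.0)),
               ((0.0, 100.0), (250.0, 150.0))]
skewed_edges = table_edges[:2] + [((0.0, 100.0), (250.0, 200.0))]
print(vanishing_point_spread(table_edges))          # 0.0
print(round(vanishing_point_spread(skewed_edges)))  # 101
```

For the three consistent edges, every pairwise intersection falls at (500, 200). Tilting one edge scatters the intersections by roughly 100 pixels, which is the kind of deviation the text describes.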

Beyond the Visual: Text and Authenticity

While physics is a powerful weapon for images, AI authorship detection for text remains a cat-and-mouse game. Detecting bot-written articles often requires checking for “hallucinations”: factual errors stated with high confidence.

The fight for AI content authenticity is pushing major tech platforms to adopt new standards. For instance, we are seeing the rise of AI watermark detection and content provenance standards. Tools like the Google SynthID detector are being designed to read invisible signals embedded into content at the moment of creation.

The Role of Provenance

Verifying AI-generated content is becoming a standard workflow in newsrooms. Just as a bank teller holds a $20 bill to the light to see the watermark, digital systems are beginning to look for AI content provenance metadata. This “active” detection is distinct from the “passive” analysis of shadows and geometry. It relies on a chain of trust: knowing where the file came from and how it was packaged.
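The chain-of-trust idea can be illustrated with a toy manifest that binds a hash of the content to a signed claim about who produced it. This is not the C2PA format. Real Content Credentials use certificate-based digital signatures and a much richer manifest structure. The sketch below substitutes an HMAC with a hypothetical shared key so it runs with the Python standard library alone, and the field names are invented. It shows the two checks that matter: the claim has not been altered, and the content still matches the hash recorded in the claim.

```python
import hashlib
import hmac
import json

# Hypothetical shared key. Real C2PA manifests are signed with X.509
# certificates (public-key signatures); HMAC keeps this sketch stdlib-only.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claim: dict) -> str:
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, producer: str) -> dict:
    """Bind a hash of the content to a signed claim about who produced it."""
    claim = {"producer": producer, "sha256": hashlib.sha256(content).hexdigest()}
    return {"claim": claim, "signature": _sign(claim)}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """True only if the claim is untampered and still matches the content."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # the claim itself was edited
    return manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


original = b"raw image bytes"
manifest = make_manifest(original, producer="ExampleCam")
print(verify_manifest(original, manifest))            # True
print(verify_manifest(original + b"edit", manifest))  # False: content changed
```

The design point is that trust rests on the signature, not on the file looking plausible. Any edit to the pixels or to the claim breaks verification, though a missing manifest by itself says nothing about whether content is real.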

For researchers and journalists, the toolkit is expanding. We are moving from simple tutorials on detecting deepfake audio to enterprise-grade detection platforms for journalists that combine audio spectral analysis with visual geometry checks.

The Audio Frontier: Reverberation and Space

Speaking of audio, detecting misinformation built on AI-generated voices is the next major hurdle. Just as images must obey light, audio must obey space. If you are recording in a professional studio with soft walls, the reverberation (echo) is minimal. If you are in a cathedral, it is massive.

AI audio generators often mismatch these cues. You might hear a voice that sounds like it’s in a small room, but the background noise suggests a busy street. These acoustic inconsistencies are the audio equivalent of the “shadows” problem. AI misinformation research is heavily invested in training models to “hear” the shape of the room.

We are seeing new AI detection algorithms that specifically map the “impulse response” of a recording (how the room itself colors and prolongs a sound) to see if it matches the alleged physical environment. NLP for misinformation detection handles the content of what is said, while acoustic forensics, now increasingly used in deepfake detection, handles the context of where it was said.
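One descriptor that forensic audio work can compare against a claimed environment is reverberation time (RT60): how long sound takes to decay by 60 dB. The Python sketch below applies Schroeder backward integration, a standard room-acoustics technique, to an impulse response and estimates RT60 from the -5 to -25 dB portion of the decay. Two simplifications are assumptions of this sketch. First, it uses the two crossing points instead of the usual linear regression. Second, it assumes a clean impulse response is available, whereas forensic tools usually have to estimate reverberation blindly from the speech recording itself, which is much harder.

```python
import math


def schroeder_decay_db(ir: list[float]) -> list[float]:
    """Schroeder backward integration of an impulse response, in dB re: total energy."""
    tail = [0.0] * len(ir)
    acc = 0.0
    for i in range(len(ir) - 1, -1, -1):  # accumulate energy from the end backwards
        acc += ir[i] * ir[i]
        tail[i] = acc
    total = tail[0] if tail else 0.0
    if total == 0:
        raise ValueError("impulse response has no energy")
    return [10 * math.log10(e / total) if e > 0 else -math.inf for e in tail]


def estimate_rt60(ir: list[float], sample_rate: int) -> float:
    """T20-style RT60 estimate: time from -5 dB to -25 dB on the decay, times 3."""
    curve = schroeder_decay_db(ir)
    start = next((i for i, v in enumerate(curve) if v <= -5.0), None)
    end = next((i for i, v in enumerate(curve) if v <= -25.0), None)
    if start is None or end is None:
        raise ValueError("decay never reaches -25 dB; response too short")
    return 3.0 * (end - start) / sample_rate


# Synthetic impulse response with a known 0.5 s RT60: amplitude falls 60 dB in 0.5 s.
fs = 8000
t60 = 0.5
ir = [10 ** (-3 * (n / fs) / t60) for n in range(int(2 * t60 * fs))]
print(round(estimate_rt60(ir, fs), 2))  # 0.5
```

A large stone church typically shows an RT60 of several seconds, while a treated studio shows a fraction of a second. A voice with studio-dry reverberation over street-scene ambience is exactly the kind of mismatch this measurement can expose.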

The Digital Signature: Moving Beyond Visual Cues

While the physical inconsistencies inherent in AI generation, like flawed shadows and scattered vanishing points, provide powerful physics-based signals for deepfake detection, this passive analysis is only one half of the forensic battle. As generative models improve and close the 2D-to-3D gap, detection must shift toward the digital ‘fingerprints’ the AI leaves behind.

This shift involves looking at data structure, active signals and the code used by the adversary. The effectiveness of machine learning fake news detection hinges on these highly technical, often invisible, traces.

Pro Tip 3: The Packaging Analogy (Metadata and Code Consistency)

When you receive a product from an online retailer, the product itself is in the box, but the packaging (the tape, the custom box, the internal wrapping) tells you who shipped it. This is the perfect analogy for digital files. When a generative AI service such as OpenAI’s DALL-E or Midjourney creates an image, it produces the raw pixels, but then it must “bundle them up” into a standard format like JPEG or PNG. Audio and video generators do the same with formats such as MP4.

This bundling process, which we can call ‘packaging,’ is often distinctive to each generator. The specific algorithms and parameters one AI service uses to compress and format a file differ from those used by another service and, crucially, from those used by a standard smartphone camera or Adobe Photoshop.

Forensic researchers can examine this underlying package (the compression artifacts, the header information, the specific byte sequences) to determine a file’s origin. Generators do not yet routinely emulate the packaging of real cameras, but this is a race, and that could change. For now, looking at the packaging is a powerful, low-level technique. It is one of the best tools to detect deepfake media because it reveals the specific assembly line the content passed through.
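As a small example of reading the “packaging,” the Python sketch below walks the marker segments at the start of a JPEG file and extracts its quantization tables (the DQT segments). These tables control compression and often differ between encoders and quality settings. The forensic step would be comparing them against a library of known encoder signatures; that library is hypothetical here and not shown. The parser is deliberately minimal. It stops at the first start-of-scan (SOS) marker and ignores fill bytes and other edge cases that real files can contain.

```python
import struct

# A few common marker names; anything else is shown as hex.
MARKER_NAMES = {0xD8: "SOI", 0xE0: "APP0", 0xE1: "APP1", 0xDB: "DQT",
                0xC0: "SOF0", 0xC2: "SOF2", 0xC4: "DHT", 0xDD: "DRI",
                0xDA: "SOS", 0xFE: "COM"}


def jpeg_structure(data: bytes) -> tuple[list[str], dict[int, list[int]]]:
    """Return the marker sequence up to the first SOS and any quantization tables."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    markers, qtables = ["SOI"], {}
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # includes these 2 bytes
        payload = data[pos + 4:pos + 2 + length]
        markers.append(MARKER_NAMES.get(marker, f"0x{marker:02X}"))
        if marker == 0xDB:  # DQT: one or more 64-entry tables
            i = 0
            while i < len(payload):
                precision, table_id = payload[i] >> 4, payload[i] & 0x0F
                size = 2 if precision else 1  # 16-bit or 8-bit entries
                raw = payload[i + 1:i + 1 + 64 * size]
                if len(raw) != 64 * size:
                    raise ValueError("truncated DQT segment")
                qtables[table_id] = list(struct.unpack(f">64{'H' if size == 2 else 'B'}", raw))
                i += 1 + 64 * size
        if marker == 0xDA:
            break  # entropy-coded image data follows; stop parsing here
        pos += 2 + length
    return markers, qtables


# Synthetic header: SOI, a 5-byte APP0, one 8-bit DQT table (values 1..64), a bare SOS.
dqt = bytes([0x00]) + bytes(range(1, 65))
sample = (b"\xff\xd8"
          + b"\xff\xe0" + struct.pack(">H", 2 + 5) + b"JFIF\x00"
          + b"\xff\xdb" + struct.pack(">H", 2 + len(dqt)) + dqt
          + b"\xff\xda" + struct.pack(">H", 2))
markers, tables = jpeg_structure(sample)
print(markers)        # ['SOI', 'APP0', 'DQT', 'SOS']
print(tables[0][:4])  # [1, 2, 3, 4]
```

On a real file, you would pass the bytes read from disk. The marker order and table values then become features that can be compared across cameras, editing software and generators.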

Utilizing Machine Learning Fake News Detection

Fact-checking AI-generated content by assessing its digital packaging is a task well suited to AI itself. A sophisticated AI detector is essentially a specialized machine learning algorithm trained on millions of examples of how different sources (cameras, phones, Midjourney, DALL-E) package their files. It identifies minute, non-visual patterns that reveal fabrication. This is part of a comprehensive strategy for AI misinformation prevention, as it allows platforms to halt the spread of synthetic media based on origin, not just content.

When determining whether an article is AI-generated, text analysis focuses on linguistic patterns. For visual media, however, file analysis focuses on statistical patterns embedded in the data structure, which is often more reliable than subjective visual checks.

The Active Defense: Embedding Trust

The passive techniques (shadows, geometry, packaging) are reactive; they analyze content after it has landed on the desk. The next phase in deepfake detection is the development of active techniques: embedding a signal at the point of creation that is designed to be very hard to remove.

Pro Tip 4: Imperceptible Watermarks (The Counterfeit Currency Model)

Think about physical currency. A $20 bill has security features, such as a watermark and a security thread, that are invisible until you hold it up to the light. These features make the bill extremely difficult to counterfeit.

Major tech companies such as Google have announced that content from their generative products, including images, audio and video, will carry an imperceptible watermark; Google’s is called SynthID. This feature provides a built-in signal that says, “We made this.”

This process is a game-changer for AI-generated video detection and other high-impact synthetic media. The watermark is invisible to the human eye and designed to survive common manipulations such as cropping, compression and filtering. Only specialized software, such as the tools used by researchers or law enforcement, can detect it.

This active provenance is key for AI content verification tools. Suppose a source, such as a camera maker or news organization, commits to marking every piece of authentic content it produces. Then any media that claims to come from that source but lacks the mark instantly carries a red flag. This approach is a core part of effective AI fake news prevention strategies and directly strengthens deepfake detection efforts. Furthermore, if implemented widely, it can support US regulatory requirements for AI-generated content by providing clear accountability for synthetic media. While detection services in the USA vary, the move toward mandated watermarking is a global trend.

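When the watermark is intact, this technique can be extremely accurate, because the detector is specifically designed to recognize a signal it knows was placed there. Unlike detectors that estimate authorship from statistical probabilities, a watermark check tests for a known signal, which also greatly simplifies the challenge of detecting synthetic text. Heavy editing or paraphrasing can still weaken the mark, so the absence of a watermark is not proof that content is authentic.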
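The principle that a detector “knows what it is looking for” can be shown with classic spread-spectrum watermarking. This is a textbook technique, not SynthID’s actual method. In the Python sketch below, a secret key seeds a pseudo-random ±1 pattern that is added faintly to a signal. The detector regenerates the pattern from the key and measures correlation as a z-score. The key values, strength and threshold are assumptions. Detection here is a statistical threshold test. With a threshold of 4, an unmarked signal would be flagged only about 3 times in 100,000 under a Gaussian approximation, which is why an intact mark can be detected with very high confidence.

```python
import math
import random


def keyed_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern that only holders of the key can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(signal: list[float], key: int, strength: float = 0.1) -> list[float]:
    """Add a faint copy of the keyed pattern to the signal."""
    pattern = keyed_pattern(key, len(signal))
    return [s + strength * p for s, p in zip(signal, pattern)]


def detection_score(signal: list[float], key: int) -> float:
    """Correlation with the keyed pattern, scaled to be roughly N(0, 1) if unmarked."""
    n = len(signal)
    pattern = keyed_pattern(key, n)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]
    std = math.sqrt(sum(c * c for c in centered) / n)
    if std == 0:
        return 0.0
    corr = sum(c * p for c, p in zip(centered, pattern))
    return corr / (std * math.sqrt(n))


THRESHOLD = 4.0  # assumed decision threshold on the z-score

rng = random.Random(123)
host = [rng.gauss(0.0, 1.0) for _ in range(10_000)]  # stand-in for image or audio samples
marked = embed(host, key=42)
print(detection_score(marked, key=42) > THRESHOLD)
print(detection_score(host, key=42) > THRESHOLD)
```

With these settings, the marked signal scores around 10 while the unmarked one hovers near 0. The mark changes each sample by only a tenth of the signal’s typical variation, yet the key makes it easy to find.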

Winning the Adversarial Game: Reverse Engineering

The detection war is fundamentally adversarial. For every defensive measure, there is an offensive effort to defeat it. This requires deepfake detection tools to not just look at the finished product, but to anticipate and analyze the adversary’s toolkit.

Pro Tip 5: Reverse Engineering the Adversary (The Bounding Box Flaw)

Many open-source deepfake libraries are used to create “face-swap” deepfakes. These tools follow a predictable, sequential process:

- Identify the original face.

- Pull the original face off (often by placing a square bounding box around it).

- Synthesize a new face.

- Replace it, blending the edges.

While the blending is often seamless to the human eye, the second step, the bounding box, can leave a residual digital scar. Text forensics is complex, but visual forensics sometimes reveals simple mechanical errors. Researchers can go into the raw video and use forensic methods to discover that the subtle outline of the bounding box still exists in the video data, which is strong evidence of fabrication.
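Here is a toy version of hunting for that digital scar. If the swapped region differs slightly from its surroundings in brightness, noise or compression, the box edges show up as columns or rows where pixel differences jump far above normal. The Python sketch below scores each column boundary of a grayscale frame by its mean absolute horizontal difference and flags boundaries that exceed a multiple of the median score. Transposing the frame checks rows. The function names and the 4x ratio are assumptions. Real detectors learn these artifacts from data rather than using a fixed rule, not least because ordinary vertical edges in a scene also produce high scores.

```python
import statistics

Frame = list[list[float]]


def column_seam_scores(frame: Frame) -> list[float]:
    """Mean absolute difference across each boundary between adjacent columns."""
    width = len(frame[0])
    return [statistics.mean([abs(row[j] - row[j - 1]) for row in frame])
            for j in range(1, width)]


def suspicious_boundaries(frame: Frame, ratio: float = 4.0) -> list[int]:
    """Column indices j where the step from column j-1 to j is unusually strong."""
    scores = column_seam_scores(frame)
    baseline = max(statistics.median(scores), 1e-9)  # avoid a zero baseline on flat frames
    return [j for j, s in enumerate(scores, start=1) if s > ratio * baseline]


def transpose(frame: Frame) -> Frame:
    return [list(col) for col in zip(*frame)]


# Synthetic 20x20 frame: faint checkerboard texture plus a pasted 10x10 region
# (rows and columns 5..14) that is 20 levels brighter, mimicking a blended swap.
frame = [[100.0 + ((r + c) % 2) + (20.0 if 5 <= r < 15 and 5 <= c < 15 else 0.0)
          for c in range(20)] for r in range(20)]
print(suspicious_boundaries(frame))             # [5, 15]  (vertical box edges)
print(suspicious_boundaries(transpose(frame)))  # [5, 15]  (horizontal box edges)
```

The flagged rows and columns outline a square, which is the telltale shape of the bounding-box step described above.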

This approach relies on training data that specifically models the artifacts left by known generative software. By understanding the attacker’s code, detection labs can build sophisticated countermeasures. The strategy also helps detect visual “hallucinations” in deepfakes by identifying where the AI made a mechanical mistake it couldn’t cover up. This is a critical factor in understanding how accurate AI detectors really are.

The Policy and Strategy Shift

Identifying AI-generated content is growing more complex, and that demands a shift in public and institutional policy. The misinformation trends of 2025, from sophisticated fake profiles used for influence operations to AI-generated fake reviews, mean that detection cannot be solely technical; it must be strategic.

Policy on AI-generated misinformation is rapidly evolving, driven by the need to detect and counter AI disinformation campaigns that target elections or critical infrastructure. Furthermore, as the tools become ubiquitous, accessibility becomes a priority. An AI content detection API or a detection browser extension is becoming standard equipment for journalists and media analysts.

However, we must also acknowledge the limitations of AI content detection. As models become better, the signals we rely on (shadows, packaging, bounding boxes) will disappear. This leads to the most important realization of all: the human element.

The Human Failure: Why Your Intuition Can’t Beat AI Misinformation

Despite sophisticated detection techniques, from physics-based analysis of shadows and geometry to digital forensics of packaging and watermarks, the stark reality remains: the average person cannot reliably distinguish real content from synthetic content.

This is the central, sobering conclusion from experts in deepfake detection. An individual doomscrolling on social media (your mother, a busy colleague or even you) is fundamentally ill-equipped for this adversarial contest. The threat of AI misinformation lies not just in its creation, but in the false sense of security it breeds. Even if you understand the five pro tips outlined above, the speed and scale of modern deepfakes render human, real-time assessment obsolete.

The Speed-Accuracy Trade-Off

The algorithms used for text forensics and visual checks are powerful, but they are also slow. They involve computationally intensive processes: calculating vanishing points, tracing light sources, checking for compression artifacts and scanning for imperceptible signals such as AI fingerprints or content watermarks.

A human, by contrast, relies on intuition, which is trained by a lifetime of natural, unmanipulated media. The human visual system is forgiving and designed to process information quickly, prioritizing narrative and context over pixel-level accuracy. Minute inconsistencies (a strange flicker, an unnatural blink rate or a mismatched shadow) are often discarded by the brain in favor of the overall narrative, especially when the content is designed to trigger an emotional response.

The False Sense of Security

The greatest danger is not the deepfake itself, but the false sense of security that comes from believing you can spot one. As detection techniques are revealed, the adversaries creating synthetic propaganda patch the flaws. The five pro tips detailed above are temporary. A generative model that fails the shadow test today may be updated to pass it next month. This constant, evolving arms race is why best practices for detecting AI content, including deepfakes, are fluid and often require dedicated, institutional resources.

This rapid evolution means that any static checklist of “things to look for” can become obsolete within weeks. Someone trying to detect manipulative synthetic text or video based on an outdated visual cue might confidently label a real video as fake or, worse, label a sophisticated fake as real.

AI Detection in Journalism and the Expert Divide

This leads to a clear and widening divide: the gap between public perception and expert capability.

For professionals, such as fact-checkers and editors, AI detection in journalism is no longer a purely human task; it is an automated deepfake detection pipeline. These organizations employ tools that perform multimodal analysis, checking visual geometry, acoustic consistency and digital provenance simultaneously. They rely on continually retrained models and access to specialized research, including published detection studies and platforms built on open detection datasets.

The expert toolkit includes:

- Evaluating AI detectors against content detection benchmarks to ensure high precision and recall.

- Using platforms optimized for AI-driven misinformation monitoring during critical events like elections.

- Consulting with certified professionals who provide AI detection certification and host AI misinformation workshops.
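Precision and recall are the two numbers most benchmarks report, and they answer different questions. Precision asks: of the items a detector flags, how many were truly fake? Recall asks: of all the fakes, how many did it catch? The Python sketch below computes both, plus their harmonic mean (F1), from confusion-matrix counts. The function name and the counts in the example are hypothetical.

```python
def detector_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Precision, recall and F1 from confusion-matrix counts.

    tp: fakes correctly flagged; fp: real items wrongly flagged;
    fn: fakes the detector missed.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical benchmark: 80 fakes caught, 20 real items wrongly flagged, 10 fakes missed.
print(detector_metrics(tp=80, fp=20, fn=10))
```

With these made-up counts, precision is 0.80 and recall is about 0.89. One in five flags lands on real content, while roughly one fake in nine slips through. A newsroom might tune a detector toward higher recall during an election and route the extra false alarms to human review.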

The average user simply does not have access to these sophisticated tools or the constantly changing knowledge required to use them effectively. This is why the expert conclusion is stark: if you are scrolling casually, you cannot trust your own eyes.

The Future of AI Content Authenticity Standards

Since human intuition fails, the solution must be technical and systemic. This future centers on AI content authenticity standards and active provenance, moving the burden of proof from the consumer to the creator.

The move toward embedding metadata and watermarks (like Google’s SynthID and the C2PA Content Credentials) is the only scalable way to identify AI-generated content and fight synthetic propaganda, especially in critical areas like political misinformation and elections. It is the foundation for building a digital ecosystem that is inherently trustworthy.

Best AI Detector for Researchers and Public Education

For those who must verify content, such as educators, academic researchers and fact-checkers, there are specialized, high-accuracy platforms.

- AI detection for fact-checkers often involves high-sensitivity tools designed to spot minimal traces of fabrication.

- AI detection for educators is crucial for maintaining academic integrity, helping students learn how to verify AI-generated images and understand the risks of synthetic sources. Resources like AI content detection tutorials and GitHub projects are helping to democratize knowledge, but they are not a substitute for institutional policy and advanced tools. For example, a commercial tool like Originality.ai offers a focused suite of services, making it a strong contender for researchers who prioritize high accuracy and detailed analysis.

Ultimately, the best defense is not better human detection, but widespread education and a fundamental change in our relationship with digital content. We must move from an assumption of reality to a demand for proof of provenance.

FAQs:

Q1: Why are AI-generated images often geometrically inconsistent?

A: AI generators typically operate in 2D space, learning patterns from existing images. They do not simulate the laws of 3D physics (like light behavior, shadow casting and projective geometry). This results in physics violations, such as inconsistent light sources or scattered vanishing points, which advanced forensic tools can detect.

Q2: How do experts use AI content watermarking for detection?

A: AI content watermarking (e.g., Google’s SynthID) embeds an imperceptible signal into the content (image, audio, video) at the moment of creation. This signal acts as a digital fingerprint. Specialized software can read this mark to verify the content’s provenance and confirm that it was generated by a specific AI model.

Q3: Can I trust my eyes to spot a deepfake video?

A: No. Experts overwhelmingly agree that the average person cannot reliably spot a deepfake video simply by looking at it. Generative AI is constantly improving, rapidly patching minor visual flaws (like abnormal blinking or facial artifacts). Relying on intuition provides a false sense of security. Reliable detection requires technical analysis, such as forensic examination of the file or watermark checks.

Q4: What is C2PA and how does it relate to ai content authenticity standards?

A: C2PA (Coalition for Content Provenance and Authenticity) is a standards body developing technical specifications for embedding cryptographic metadata, known as Content Credentials, directly into digital media. This metadata provides a tamper-evident record of the content’s origin and modification history, serving as a basis for AI content authenticity standards and helping users identify and report AI-generated misinformation more effectively.

Q5: How accurate is AI content detection for text?

A: Accuracy rates for detecting AI-generated text are highly variable, and detectors often struggle to achieve 100% reliability, especially as LLMs are refined to produce more “human-like” text. Many high-quality free tools have accuracy rates around 60–70%, while specialized commercial tools might claim higher, but no tool offers a perfect guarantee. This is one of the primary limitations of AI content detection.